Android Project for Visually Impaired Students - Zeynep Uslu

1. PROJECT PLAN

1.1 Project Background and Overview

There are many visually impaired students who would benefit from an easy-to-use college navigation and support application. Tasks that sighted students do with ease, such as finding a new classroom, checking grades in the student portal, or reading email, can be challenging for them. Because they need someone else's assistance in their daily routine, some of them drop out of school or choose not to continue their college education. My goal in creating this application is to prevent this by providing an easy-to-use tool that lets blind students get to their classrooms and keep up with announcements and grades on their own, giving them an easier transition to college.

1.2 Project Scope

This project is a GPS- and voice-over-based Android application designed to assist visually impaired students at Binghamton University. With this app, these students will be able to navigate to classes, keep up with their schedules and receive their Blackboard notifications, minimizing their need for help from others. The application requires GPS and an internet connection in order to navigate and display results. Specifically included will be support for bus schedules and locations and for classroom navigation. The main parts of the application are Go To..., My Schedule, Blackboard, BMail, Most Used, Shuttle and Classrooms.

1.2.1 How Go To... Works

Go To... is used when users want to go to a new location or reach one of their common locations from somewhere new. When they touch the tab, the application prompts the user for a destination through the voice-over system and finds the user's current location. When the route calculation is complete, the program announces that the route is ready. Finally, it navigates the user to the destination.

1.2.2 How My Schedule Works

This section will store the users' class schedules. My Schedule will also have an alarm system that warns users when a class is about to start, so they can keep up with their schedule and do not miss their classes.
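The core of the alarm is a small date calculation: given a weekly class meeting and a lead time, find when the next warning should fire. The sketch below does this with java.time; ClassMeeting, ScheduleAlarm and the leadMinutes parameter are hypothetical names, not part of the existing app. On Android, the computed time could be handed to AlarmManager to trigger the spoken warning.

```java
import java.time.DayOfWeek;
import java.time.LocalDateTime;
import java.time.LocalTime;
import java.time.temporal.TemporalAdjusters;

// Hypothetical model of one weekly class meeting stored by My Schedule.
class ClassMeeting {
    final String course;
    final DayOfWeek day;
    final LocalTime start;

    ClassMeeting(String course, DayOfWeek day, LocalTime start) {
        this.course = course;
        this.day = day;
        this.start = start;
    }
}

class ScheduleAlarm {
    /**
     * Returns when the next "class is about to start" warning should fire:
     * the next meeting start minus leadMinutes, strictly after now.
     */
    static LocalDateTime nextAlarm(ClassMeeting m, LocalDateTime now, long leadMinutes) {
        // Meeting on its weekday in the current week (today counts if it matches).
        LocalDateTime start = now.with(TemporalAdjusters.nextOrSame(m.day)).with(m.start);
        // If this week's warning time has already passed, use next week's meeting.
        if (!start.minusMinutes(leadMinutes).isAfter(now)) {
            start = start.plusWeeks(1);
        }
        return start.minusMinutes(leadMinutes);
    }
}
```

For example, with a Monday 10:05 class and a 10-minute lead time, calling nextAlarm at 09:00 that Monday yields 09:55 the same day.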

1.2.3 How Blackboard Works

The application will be compatible with Blackboard so that users can follow new announcements about exam grades, new assignments, etc. Whenever an update occurs in a class, the voice-over system will notify the user. However, this can be distracting if the application starts speaking in the middle of a lecture, during an exam or in the library. This is one of the concerns about the project.

1.2.4 How BMail Works

BMail works in nearly the same way as Blackboard. Users will be notified when they receive an email, and they must be able to choose which types of mail trigger a notification. For instance, starred mail may be more important to users than other mail, so a user may want to be notified only when such messages arrive in BMail and ignore the rest, or may prefer not to be notified at all. These options must be offered when the application is installed.
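The per-type notification choice boils down to a filter applied to each incoming message. The sketch below assumes three hypothetical modes (all mail, starred only, none) and a minimal IncomingMail type; neither comes from the Gmail API, and the real app would map its own message fields onto them.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical notification preference chosen when the app is installed.
enum MailAlertMode { ALL, STARRED_ONLY, NONE }

// Hypothetical minimal view of an incoming message.
class IncomingMail {
    final String subject;
    final boolean starred;

    IncomingMail(String subject, boolean starred) {
        this.subject = subject;
        this.starred = starred;
    }
}

class MailAlertFilter {
    /** Decides whether a single message should trigger a spoken warning. */
    static boolean shouldAnnounce(IncomingMail mail, MailAlertMode mode) {
        switch (mode) {
            case ALL:
                return true;
            case STARRED_ONLY:
                return mail.starred;
            default:
                return false; // NONE: never interrupt the user
        }
    }

    /** Returns the subjects that should be read out, in arrival order. */
    static List<String> subjectsToAnnounce(List<IncomingMail> inbox, MailAlertMode mode) {
        List<String> out = new ArrayList<>();
        for (IncomingMail m : inbox) {
            if (shouldAnnounce(m, mode)) {
                out.add(m.subject);
            }
        }
        return out;
    }
}
```

Keeping the decision in one small function makes it easy to add further modes later without touching the code that speaks the result.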

1.2.5 How Shuttle Works

Using the Shuttle tab, users will be able to check the OCCT bus schedules and navigate to the nearest bus stop if they decide to take the bus. This section also lets users select a bus stop as a destination so that they do not have to use "Go To..." again.
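Finding "the nearest bus stop" means comparing the user's position with each stop's coordinates. A minimal sketch, assuming the stops are available as a list of latitude/longitude pairs (BusStop and NearestStop are hypothetical names), uses the haversine great-circle distance. On Android, Location.distanceBetween could replace the hand-written formula.

```java
import java.util.List;

// Hypothetical bus stop record; names and coordinates are supplied by the caller.
class BusStop {
    final String name;
    final double lat, lon;

    BusStop(String name, double lat, double lon) {
        this.name = name;
        this.lat = lat;
        this.lon = lon;
    }
}

class NearestStop {
    private static final double EARTH_RADIUS_M = 6_371_000.0; // mean Earth radius

    /** Great-circle (haversine) distance in meters between two lat/lon points. */
    static double distanceMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    /** Returns the stop closest to the user, or null if the list is empty. */
    static BusStop nearest(double userLat, double userLon, List<BusStop> stops) {
        BusStop best = null;
        double bestDist = Double.POSITIVE_INFINITY;
        for (BusStop s : stops) {
            double d = distanceMeters(userLat, userLon, s.lat, s.lon);
            if (d < bestDist) {
                bestDist = d;
                best = s;
            }
        }
        return best;
    }
}
```

The straight-line distance only picks the stop; the walking route to it would still come from the same directions logic that Go To... uses.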

1.3 Goals

My main goal in creating this project is to create an equal-opportunity environment for disabled students by eliminating some of the obstacles they encounter in daily college life.

1.4 Concerns

The first concern about the application is GPS accuracy. Since users will navigate the campus on foot, the GPS system must be precise and must trigger the voice-over system as users arrive at their destination. An alternative idea is to use a Bluetooth-based system instead of GPS.

The second concern is the voice-over system, which also needs to work accurately so that users do not miss their target location.

Another possible problem is update notifications. Users may not want to be interrupted by the application while they are in class, studying, etc. Hence users must be given the choice of limiting these notifications. (One possible solution is to create an Announcement section and forward the notifications from Blackboard and BMail to it, so that users are informed about updates when they open this section.)
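The proposed Announcement section is essentially a queue that holds updates while the user does not want to be disturbed. The sketch below assumes a simple quiet-mode switch; AnnouncementInbox and its methods are hypothetical and only illustrate the idea of deferring instead of speaking.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical sketch of the Announcement section: while quiet mode is on,
 * Blackboard and BMail updates are stored instead of spoken, and they are
 * handed back when the user opens the section.
 */
class AnnouncementInbox {
    private final List<String> pending = new ArrayList<>();
    private boolean quietMode;

    void setQuietMode(boolean quiet) {
        this.quietMode = quiet;
    }

    /**
     * Called for every new update. Returns the text to speak immediately,
     * or null if the update was queued for later.
     */
    String onUpdate(String source, String text) {
        String message = source + ": " + text;
        if (quietMode) {
            pending.add(message);
            return null;
        }
        return message;
    }

    /** Called when the user opens the Announcement section; empties the queue. */
    List<String> openSection() {
        List<String> toRead = new ArrayList<>(pending);
        pending.clear();
        return toRead;
    }
}
```

Quiet mode could be toggled manually or, for example, switched on automatically during the class times already stored in My Schedule.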

1.5 Constraints

1.5.1 Time Frame

This project will be completed in 8 months. The design documentation must be finished within the next 3 months, and the whole project must be completed by May 16.

1.5.2 Data Migration

Although the application will be built from scratch, it will also integrate some existing applications for BU students, such as BMail and Blackboard. These parts need to be implemented and must work correctly.

1.5.3 Resources

To begin, I will run the application on my own Android device and test the GPS and voice-over system to see whether they work as intended. As the platform, I will use the Android SDK.

1.5.4 Peer/User Review

It is important that the project is examined by a potential user, to reveal design mistakes and possible problems more clearly and to fix them.

2. APPROACH

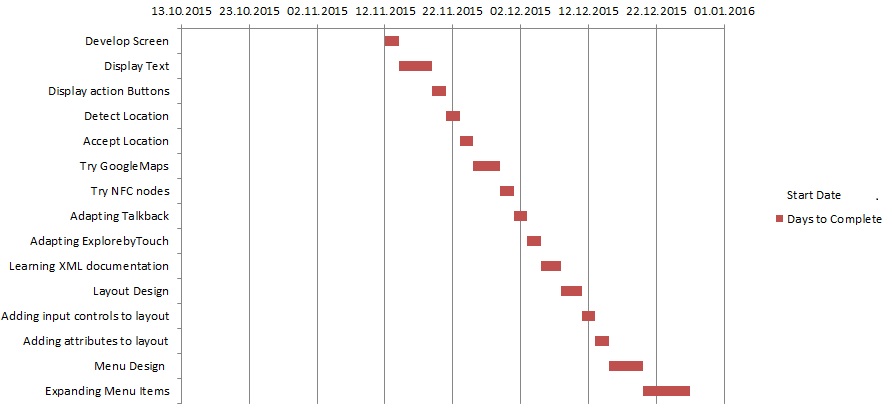

First Semester Gantt Chart

Second Semester Gantt Chart

This semester, I will mainly focus on outdoor navigation, schedule & calendar, and BMail.

1) Outdoors

In this part of the project, I will obtain the position as latitude and longitude, show the position on Google Maps, create a map function responsible for giving directions, and finally add it as a menu item.

Outdoors is going to be done by February 23

2) Voice Recognition

Voice recognition provides a talk-back feature for the application. It receives speech and converts it to text to generate instructions for the other menu items. The voice recognition will also go through a testing process. If the separate function does not work as intended, I will fall back to Android's own "TalkBack" feature.

Voice Recognition is going to be done by February 29
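Turning recognized text into "instructions for the other menu items" can start as simple keyword matching. The sketch below is a hypothetical router (AppSection, VoiceCommandRouter and the keyword table are illustrative, not the app's actual code); on Android, the input string would be one of the candidates returned under RecognizerIntent.EXTRA_RESULTS.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

// Menu items from the project scope, plus a fallback for unrecognized speech.
enum AppSection { GO_TO, MY_SCHEDULE, BLACKBOARD, BMAIL, SHUTTLE, UNKNOWN }

class VoiceCommandRouter {
    // Hypothetical keyword table; checked in insertion order, first match wins.
    private static final Map<String, AppSection> KEYWORDS = new LinkedHashMap<>();

    static {
        KEYWORDS.put("go to", AppSection.GO_TO);
        KEYWORDS.put("navigate", AppSection.GO_TO);
        KEYWORDS.put("schedule", AppSection.MY_SCHEDULE);
        KEYWORDS.put("blackboard", AppSection.BLACKBOARD);
        KEYWORDS.put("mail", AppSection.BMAIL);
        KEYWORDS.put("bus", AppSection.SHUTTLE);
        KEYWORDS.put("shuttle", AppSection.SHUTTLE);
    }

    /** Maps a recognized phrase to the menu item it most likely refers to. */
    static AppSection route(String recognizedText) {
        String text = recognizedText.toLowerCase(Locale.US);
        for (Map.Entry<String, AppSection> e : KEYWORDS.entrySet()) {
            if (text.contains(e.getKey())) {
                return e.getValue();
            }
        }
        return AppSection.UNKNOWN;
    }
}
```

When the result is UNKNOWN, the app could ask the user to repeat the command through TextToSpeech rather than guess.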

3) Indoors

???

4) Schedule & Calendar

A daily and monthly view will be created for students. This feature must also work with voice recognition so that users can mark dates and events on the calendar. Alternatively, I may use Google Calendar.

Schedule and Calendar must be done by April 5

5) BMail

This feature will be built with the Gmail API.

Bmail is going to be done by April 22


These are the basic steps for completing the project on time and making progress efficiently.

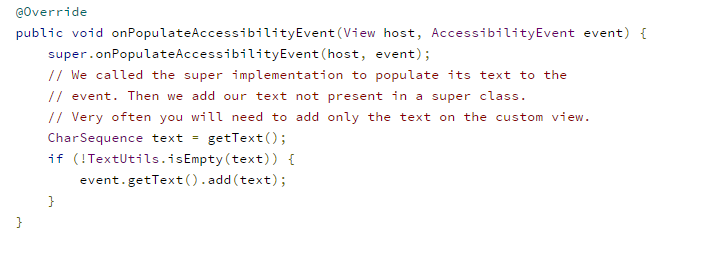

2.1 Interface Design

The most crucial considerations for the interface design are speed and ease of use. To ensure them, I have to reduce the amount of manipulation users have to do. Another important point is providing good feedback that informs users about the progress of operations and about their location as they navigate. To provide such an interface, I use TalkBack, a screen reader pre-installed on Android devices, and Explore by Touch, another pre-installed Android feature.

How does TalkBack work? When the user's finger explores the screen and comes across an element that can be acted on or a block of text that can be read back, TalkBack kicks in and reads it aloud.

2.1.1 Main Menu

To provide optimal accessibility, I used LinearLayout and ImageButton in Android Studio. A LinearLayout arranges its child views in a single column or a single row. In my application, I divided the screen into equal-sized rows and placed the ImageButtons (which represent the main actions) in these rows.

TalkBack Feature

Over the winter break I worked on a separate project for Raspberry Pi, which required us to develop a talk-back feature for the project's Android application. Ahmet and I worked on the application to receive the user's voice and convert it to text. When the user runs the application, it receives speech as input and displays the resulting text on the screen. I also updated the language options for the application, which was needed to recognize the user's voice correctly.

- The image is going to be uploaded.

Location Feature

This is a simple yet necessary part of my project. I created a separate Android application that obtains the user's current location and returns its latitude and longitude. I will adjust the application to use these values to return more specific locations (such as Bartle Library, the Engineering Building, etc.). The main concern with this application is getting accurate locations. I will test it after the first adjustment and, depending on the results, do further research to obtain more precise results.

- The image is going to be uploaded.

Meeting With Nazely Kurkjian from SSD

On December 8, Professor Jones and I met with Nazely Kurkjian, who works as an Adaptive Technology Analyst at SSD. After introducing us, Professor Jones explained the senior project class and its main goal. He also mentioned the dual diploma program, of which I am a student, and explained my project, Accessible Campus, in general terms. After that, Nazely asked some questions about the application, and I answered by giving some details of the process and the technologies I am using in my project (such as TalkBack, Google Maps, etc.). She also made some suggestions to improve the project. One of them was detecting stairs and warning the users, a brilliant idea I had not thought of. She also told me about other students' projects related to helping disabled students on campus and gave me their contact information so that we could discuss our projects (after contacting them, I will add the meeting information as well). Before we left, we agreed to keep in touch to evaluate the current form of the application and to get feedback from students and SSD. Overall, it was a very productive meeting: it led me to consider new functionality I had overlooked and to discover new "sources" who can both help me and inspire new ideas for the application.

Meeting with OCCT summary

I met with Timothy Redband, director of the OCCT buses, on October 3. I asked what kind of system they use on the buses and learned that they had just started using an application called ETA Spy, which helps students track where the buses are and when they will arrive at each stop. To support this application, they also provide drivers with a tablet computer. I then asked which platforms/operating systems the application is compatible with, to check whether my future application will be able to work with their existing system. As I learned, the application works on both Android and iOS, and the tablet that drivers use runs the Android application and is connected to GPS and the internet while working. Therefore my application will be compatible with their system.

Things I Have Done

-Decided on the overall architecture of the project.

-Examined the project with respect to its constraints and updated it accordingly.

-Read about similar applications and researched interface designs for my application.

-OCCT summary added.

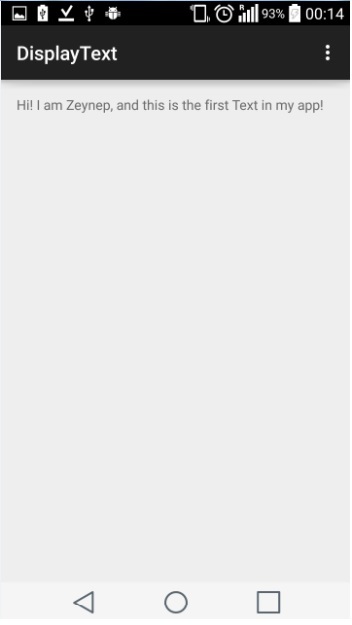

-12-14 November: Developed the screen.

-14-15 November: Created string resources to display text; the text is displayed.

-15-25 November: Researched Android application layouts and learned the basic implementation differences between them.

Researched and practiced the differences between GridLayout and GridView. (This was my first approach to the layout, but it did not work.)

Implemented RelativeLayout and LinearLayout, and adjusted parameters such as height, width and orientation.

Stored the icons for the image buttons in the drawable folder.

Adjusted ImageButton parameters such as width, height, id and background.

Set up Google Play services.

-26-30 November: Added and practiced with the Google Play APIs.

Researched the GPS (satellite) and network (wireless) location providers and learned the differences. (To save the user's battery, I decided to work with the network provider.)

Practiced with the network provider (there are still some issues). The DETECT LOCATION function works standalone, but I am still debugging it as an integrated part of the Android app layout (Android Studio 1.0.1).

BINGHAMTON UNIVERSITY MAP

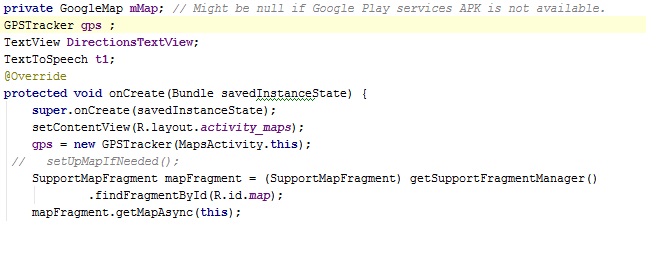

To build the map, I used the Google Maps and Google Places APIs. Before developing the app, I registered my project in the Google Developer Console, which let me obtain the API keys and use them from Android Studio.

As the first step, I added all the required Android and Google permissions to the Android manifest.

Second, I created a GoogleMap object and a GPS tracker object (the class I created earlier for the geolocation app). In the onCreate function I set up the map.

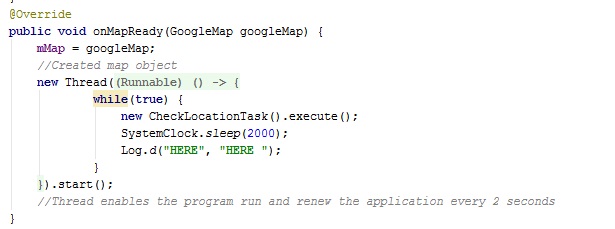

I needed a thread to track the user's movements, so in the onMapReady function I implemented a thread that updates the map every two seconds. This way, the application can show the user's movements.
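A fixed-rate refresh like this can also be expressed with a ScheduledExecutorService instead of a hand-written loop with sleeps, which makes stopping it clean. In this sketch, PeriodicUpdater is a hypothetical helper and the Runnable stands in for "read the latest fix and move the marker"; on Android, the marker update itself would still have to run on the UI thread (for example via Activity.runOnUiThread), and LocationManager.requestLocationUpdates with a minimum time is an alternative to polling.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

/**
 * Hypothetical helper that runs a map refresh at a fixed rate
 * (every 2000 ms in the app described above) until stopped.
 */
class PeriodicUpdater {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();
    private ScheduledFuture<?> task;

    /** Runs update immediately and then every periodMillis until stop() is called. */
    void start(Runnable update, long periodMillis) {
        task = scheduler.scheduleAtFixedRate(update, 0, periodMillis, TimeUnit.MILLISECONDS);
    }

    /** Cancels the refresh and lets the background thread exit. */
    void stop() {
        if (task != null) {
            task.cancel(false);
        }
        scheduler.shutdown();
    }
}
```

In an Activity, start(..., 2000) would fit in onResume and stop() in onPause, so the refresh does not keep draining the battery in the background.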

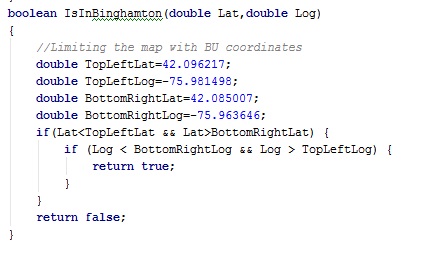

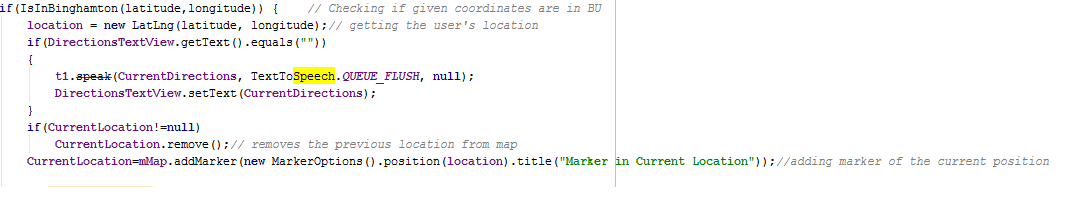

I also limited the map to the Binghamton University campus in order to customize it for the school. To do that, I determined the four main points of the campus border. The IsinBinghamton function checks whether the user is inside these limits and runs the application accordingly.

If the user tries to access the map outside BU, the application shows a toast saying "Not in Binghamton"; otherwise, it shows the user's location.
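With four border points, the "inside campus" question is a point-in-polygon test. The sketch below uses the standard ray-casting method, which works for any simple polygon, not only an axis-aligned rectangle; CampusBoundary is a hypothetical name and is not the app's IsinBinghamton function. It treats latitude/longitude as planar coordinates, which is a reasonable approximation over an area the size of a campus.

```java
/**
 * Point-in-polygon test (ray casting). Corners are {latitude, longitude}
 * pairs listed in order around the border.
 */
class CampusBoundary {
    private final double[][] corners;

    CampusBoundary(double[][] corners) {
        this.corners = corners;
    }

    boolean contains(double lat, double lon) {
        boolean inside = false;
        // Cast a ray from the point toward increasing longitude and count edge crossings;
        // an odd number of crossings means the point is inside.
        for (int i = 0, j = corners.length - 1; i < corners.length; j = i++) {
            double latI = corners[i][0], lonI = corners[i][1];
            double latJ = corners[j][0], lonJ = corners[j][1];
            boolean straddles = (latI > lat) != (latJ > lat);
            if (straddles) {
                double lonCross = lonI + (lat - latI) * (lonJ - lonI) / (latJ - latI);
                if (lon < lonCross) {
                    inside = !inside;
                }
            }
        }
        return inside;
    }
}
```

A false result from contains would be the point where the app shows the "Not in Binghamton" toast.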

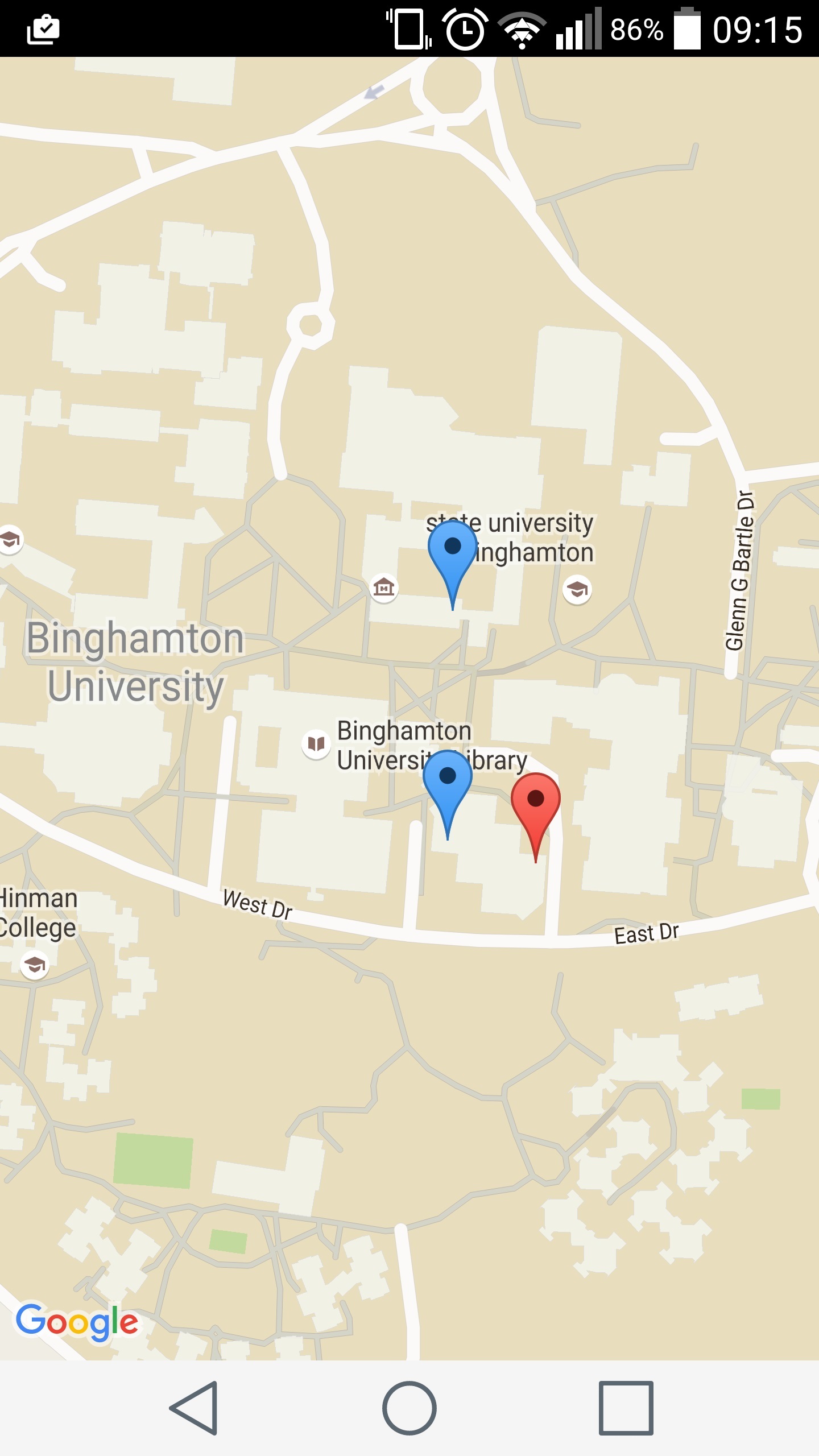

In my first attempt, I added a marker to every building on campus. When a marker was clicked, a polyline was drawn from the current location to the selected marker.

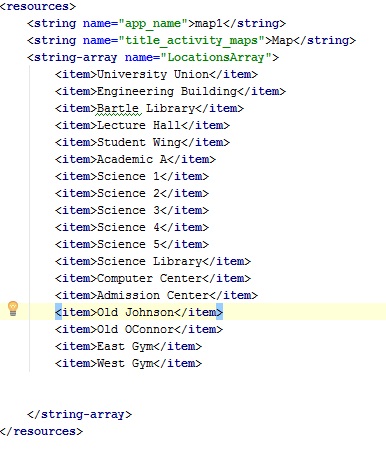

However, these markers looked quite confusing and were easy to touch, so a user might accidentally change her destination. Therefore, I dropped the idea of using permanent markers for the buildings. Instead, I decided to implement a drop-down list that shows the current destination to the user. To do that, I used the Spinner class in my application. I added each building's name to the strings.xml file of my application so that the spinner can access and display them.

After that, I implemented the onItemSelected event for the building names in the spinner. When a building name is selected, the application places a marker on the building and draws a polyline showing the route.

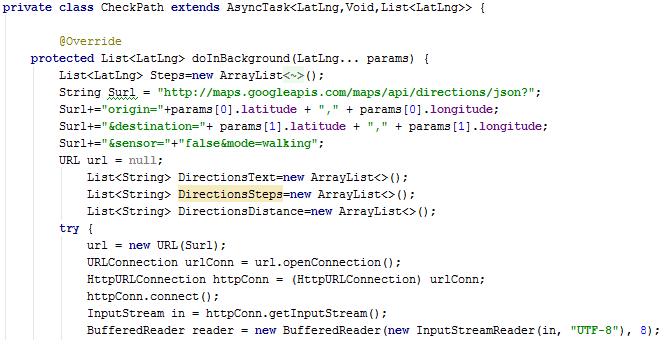

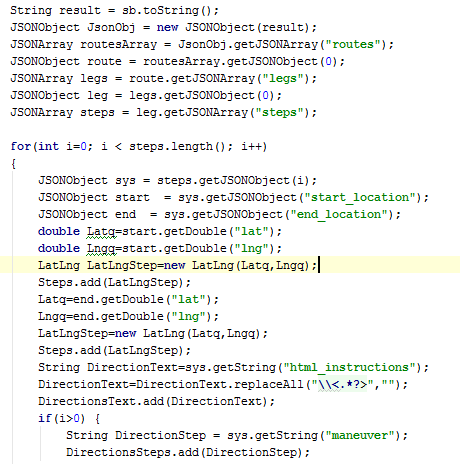

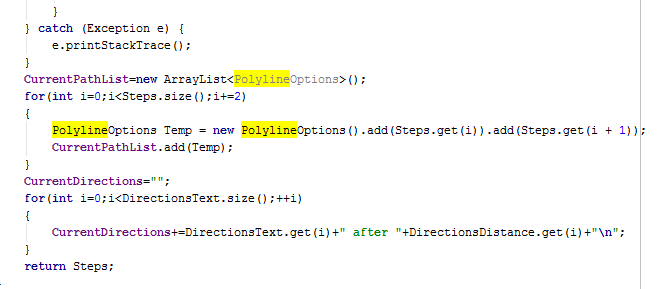

After adjusting that, I handled the problem with the polyline. In my first attempts, the polyline was drawn as a straight line from marker to marker, representing the air distance, which is useless for walking navigation. Therefore, I used PolylineOptions and the Google Directions API.
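The Directions API returns route geometry as strings in Google's Encoded Polyline Algorithm Format (for example the route's overview_polyline.points). Decoding such a string yields the list of points to add to PolylineOptions, so the drawn line follows the walking path instead of the air distance. The decoder below is a plain-Java sketch of that published algorithm; the android-maps-utils library also ships a ready-made decoder (PolyUtil.decode) if adding a dependency is acceptable.

```java
import java.util.ArrayList;
import java.util.List;

/** Decodes Google's Encoded Polyline Algorithm Format into {lat, lng} pairs. */
class PolylineDecoder {
    static List<double[]> decode(String encoded) {
        List<double[]> points = new ArrayList<>();
        int index = 0, lat = 0, lng = 0;
        int[] value = new int[1];
        while (index < encoded.length()) {
            // Each point stores its latitude and longitude as deltas from the previous point.
            index = nextValue(encoded, index, value);
            lat += value[0];
            index = nextValue(encoded, index, value);
            lng += value[0];
            points.add(new double[] { lat / 1e5, lng / 1e5 }); // 5 decimal places of precision
        }
        return points;
    }

    // Reads one signed value made of 5-bit chunks starting at index; returns the next index.
    private static int nextValue(String s, int index, int[] out) {
        int shift = 0, result = 0, b;
        do {
            b = s.charAt(index++) - 63;   // every character is offset by 63
            result |= (b & 0x1f) << shift; // low 5 bits are data
            shift += 5;
        } while (b >= 0x20);               // bit 0x20 set means another chunk follows
        // Undo the sign encoding: odd values are negative.
        out[0] = (result & 1) != 0 ? ~(result >> 1) : (result >> 1);
        return index;
    }
}
```

Each decoded pair can then be wrapped in a LatLng, passed to PolylineOptions.add, and drawn with GoogleMap.addPolyline.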

The Google Directions API provides the distance, the estimated travel time and the route to the destination, with each step's instructions formatted in HTML. I needed to display the steps of the walking route, so I parsed the JSON response. I stored the parsed information in related lists and displayed the list elements in my application. I used three lists: DirectionsText, DirectionSteps and DirectionDistance. DirectionSteps holds every step the user has to follow to reach the destination, and DirectionDistance tells the user how many feet she will walk for each step.
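Each Directions API step carries an html_instructions string (with tags such as <b>) and a distance whose value is given in meters, so two conversions are needed before TextToSpeech reads it: strip the markup and convert meters to feet. The sketch assumes the fields have already been pulled out of the JSON; DirectionsSpeech and its methods are hypothetical and only mirror the parallel-list idea described above.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical holder for the spoken form of a route: one plain-text
 * instruction and one distance in feet per step, kept in parallel lists.
 */
class DirectionsSpeech {
    final List<String> steps = new ArrayList<>();          // plain-text instruction per step
    final List<Integer> distanceFeet = new ArrayList<>();  // walking distance per step

    /** Removes HTML tags and collapses whitespace so the text reads naturally. */
    static String stripHtml(String html) {
        return html.replaceAll("<[^>]*>", " ")  // tags become spaces
                   .replace("&nbsp;", " ")
                   .replaceAll("\\s+", " ")       // collapse runs of whitespace
                   .trim();
    }

    /** Adds one step from its html_instructions text and distance in meters. */
    void addStep(String htmlInstructions, int distanceMeters) {
        steps.add(stripHtml(htmlInstructions));
        distanceFeet.add((int) Math.round(distanceMeters * 3.28084)); // 1 m = 3.28084 ft
    }

    /** Sentence for step i, ready to pass to TextToSpeech. */
    String spoken(int i) {
        return steps.get(i) + " for " + distanceFeet.get(i) + " feet.";
    }
}
```

For example, a step with instructions "Head <b>north</b> on <b>Main St</b>" and a distance of 100 m becomes "Head north on Main St for 328 feet."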

The last step of the map application was adding voice explanations. To do that, I used Android's TextToSpeech class, with instances of this class speaking the directions, the current location and the building list.

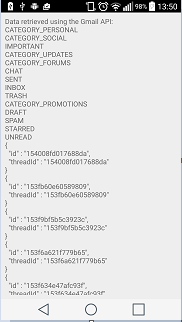

BMAIL

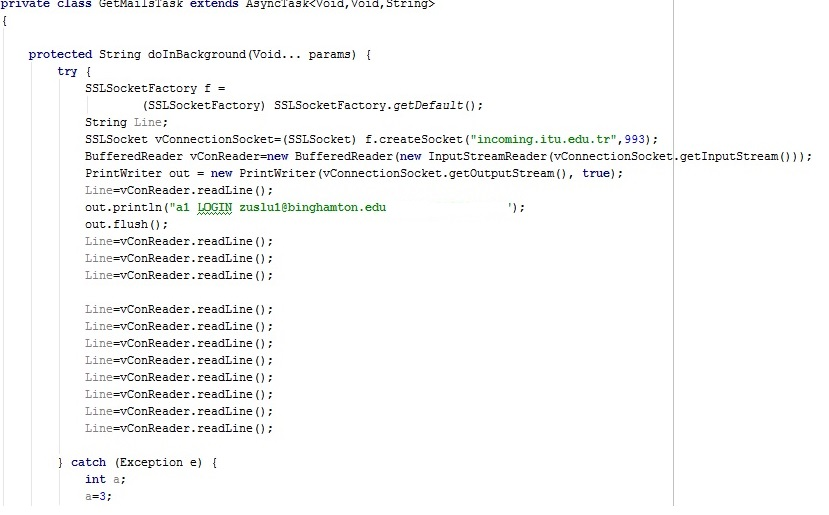

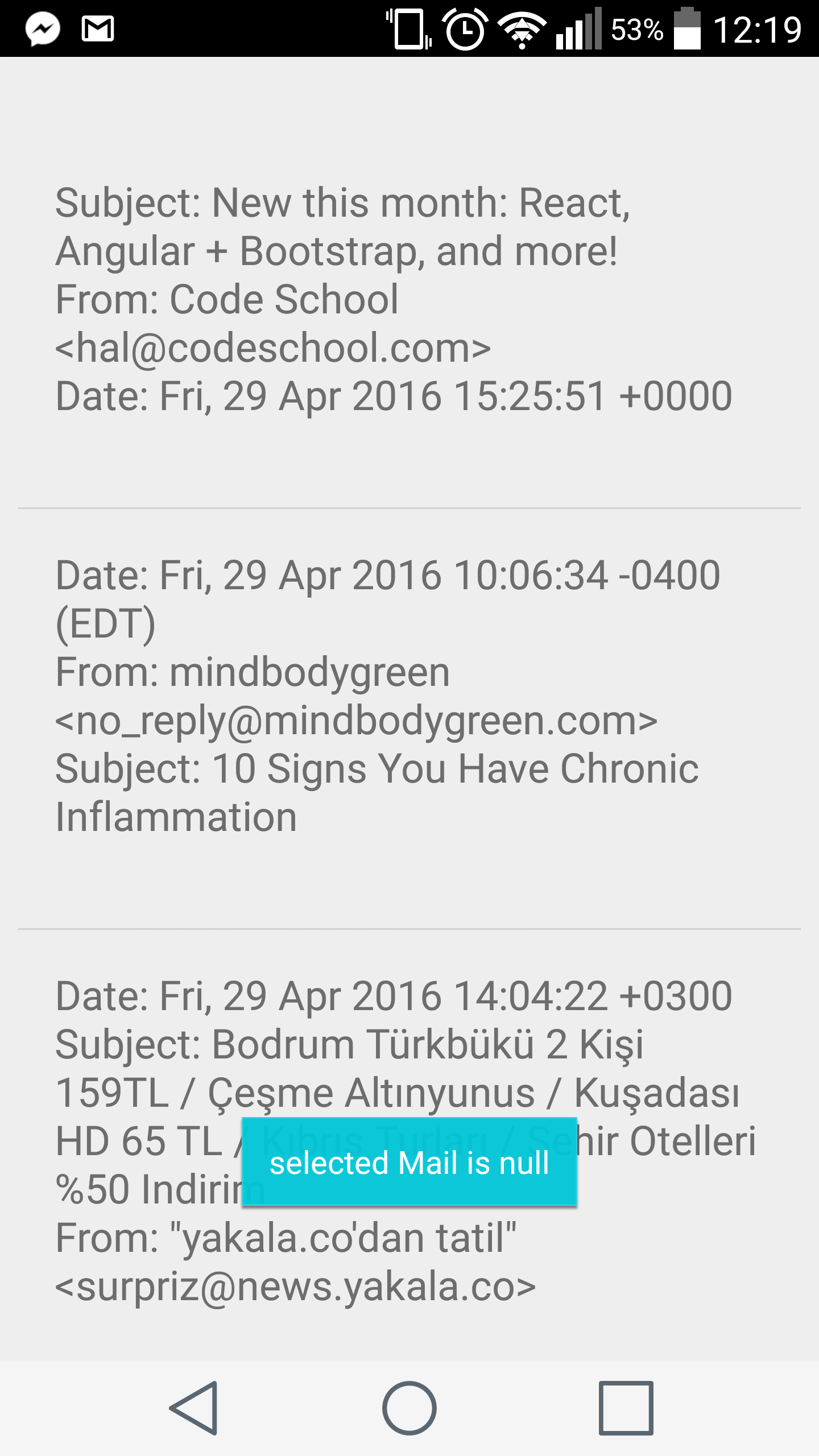

The BMail application is designed to let users access their inbox and see their new emails. It is built with the Google Gmail API and Android's TextToSpeech feature. Because it uses the API, before developing the application I created an API key in the Google Developer Console and registered the application's name with the key. In my first prototype, I managed to access the names of the mailboxes in my Gmail account.

Unfortunately, I could not access the contents of the mailboxes. Therefore, I used sockets, through Java's socket libraries, to access the emails in the inbox. I created a connection to the mail server using the SSLSocket class. To read the email content, I used a BufferedReader object wrapping an InputStreamReader. After fetching the content, I printed it out with a PrintWriter object.
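Assuming the socket talks IMAP (Gmail's IMAP server listens on port 993 over SSL), every request is a tagged command, and the server's reply ends with a line that starts with the same tag. The sketch below shows that request/response loop with plain java.io types so it can be tried offline; in the app, the reader and writer would wrap the SSLSocket's input and output streams. ImapSession and its methods are hypothetical, and the sketch skips the server greeting and login handling.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

/** Minimal IMAP command/response helper over an already-open connection. */
class ImapSession {
    private final BufferedReader in;
    private final PrintWriter out;
    private int counter = 0;

    ImapSession(BufferedReader in, PrintWriter out) {
        this.in = in;
        this.out = out;
    }

    /** Sends one tagged command and returns every reply line up to the tagged status line. */
    List<String> command(String cmd) {
        String tag = "a" + (++counter);
        out.print(tag + " " + cmd + "\r\n"); // IMAP lines end with CRLF
        out.flush();
        List<String> lines = new ArrayList<>();
        try {
            String line;
            while ((line = in.readLine()) != null) {
                lines.add(line);
                if (line.startsWith(tag + " ")) {
                    break; // tagged OK/NO/BAD ends this reply
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return lines;
    }

    /** Extracts the message count from the "* n EXISTS" line of a SELECT reply, or -1. */
    static int existsCount(List<String> reply) {
        for (String l : reply) {
            String[] parts = l.split(" ");
            if (parts.length == 3 && parts[0].equals("*") && parts[2].equalsIgnoreCase("EXISTS")) {
                return Integer.parseInt(parts[1]);
            }
        }
        return -1;
    }
}
```

For instance, command("SELECT INBOX") followed by existsCount on the reply gives the number of messages in the inbox, which the app could compare with the previous count to detect new mail.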

I also implemented a click handler for the emails, so that when the user selects an email, the application opens it and reads it to the user.

General view of the application

BLACKBOARD

To build this app, I used Android's WebView class. With this class, I direct users to Binghamton Blackboard's announcement page. I do not display Blackboard's other features, to keep users from being overwhelmed by the application. Since the announcement page includes all news and updates from each class, users will still be able to keep up with their classes.

I accessed the Blackboard announcement HTML page and parsed it by headers to split it into individual announcements. I stored the announcements in two string lists in the Android project: Header and Content. As their names suggest, they hold the header and the content of each announcement separately. This part is responsible for reading the announcements; I used Android's TextToSpeech class to read them out loud to the users.
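Splitting the page "by headers" into Header and Content lists can be sketched with a regular expression. This assumes, hypothetically, that each announcement is marked up as an <h3> title followed by its body; the actual Blackboard markup may differ, and an HTML parser such as jsoup would be more robust than a regex if the page structure is complex.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Splits an announcements page into parallel header and content lists.
 * ASSUMPTION: each announcement is <h3>title</h3> followed by its body.
 */
class AnnouncementParser {
    final List<String> header = new ArrayList<>();
    final List<String> content = new ArrayList<>();

    // Group 1: title. Group 2: everything up to the next <h3 or the end of the page.
    private static final Pattern ITEM = Pattern.compile(
            "<h3[^>]*>(.*?)</h3>(.*?)(?=<h3|\\z)",
            Pattern.DOTALL | Pattern.CASE_INSENSITIVE);

    void parse(String html) {
        Matcher m = ITEM.matcher(html);
        while (m.find()) {
            header.add(clean(m.group(1)));
            content.add(clean(m.group(2)));
        }
    }

    // Removes remaining tags and extra whitespace so TextToSpeech reads plain text.
    private static String clean(String s) {
        return s.replaceAll("<[^>]*>", " ").replaceAll("\\s+", " ").trim();
    }
}
```

Index i of header and content then refers to the same announcement, so the app can read a title first and the body only if the user asks for it.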

Problems

The only problem with the application is that the reading cannot be stopped without leaving the application. Although I added a button for users to stop the reading when they are done, the application keeps reading the announcements out loud.
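Android's TextToSpeech.stop() interrupts the current utterance and discards the queued ones, so calling it from the stop button's click handler is the first thing to check. If the announcements are fed to the engine one at a time from a loop or background thread, that loop also needs a stop flag, or it will simply queue the next announcement. The sketch below assumes the latter case; Speaker and AnnouncementReader are hypothetical names, with Speaker standing in for a thin wrapper around TextToSpeech.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical wrapper around the speech engine; on Android, speak() could
// delegate to TextToSpeech.speak(...) and stop() to TextToSpeech.stop().
interface Speaker {
    void speak(String text);
    void stop();
}

/** Reads announcements one by one and honors a stop request between items. */
class AnnouncementReader {
    private final Speaker speaker;
    private final AtomicBoolean stopRequested = new AtomicBoolean(false);

    AnnouncementReader(Speaker speaker) {
        this.speaker = speaker;
    }

    /** Returns how many announcements were handed to the speaker. */
    int readAll(List<String> announcements) {
        stopRequested.set(false);
        int spoken = 0;
        for (String a : announcements) {
            if (stopRequested.get()) {
                break; // stop button pressed: do not queue anything else
            }
            speaker.speak(a);
            spoken++;
        }
        return spoken;
    }

    /** Wire this to the stop button: end the loop and cut the current utterance. */
    void stop() {
        stopRequested.set(true);
        speaker.stop();
    }
}
```

It is also common practice to call stop() and shutdown() on the TextToSpeech instance in the Activity's lifecycle callbacks, so that speech never outlives the screen that started it.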